[A, SfS] Chapter 2: Probability: 2.3: Conditional Probability and Independence

Conditional Probability and Independence

In this lesson you will learn:

- What a conditional probability is

- What dependent and independent events are

- The correct notation for conditional probability

- How to compute a conditional probability

- How to determine whether two events are independent

- How to compute a joint probability for two independent events

- How to use Bayes’ rule to compute posterior probabilities

Conditional Probability

The conditional probability of some event #A#, given some other event #B#, is the probability of event #A# occurring if it is given that event #B# occurs. This is denoted #\mathbb{P}(A \ | \ B)#.

Dependence

Event #A# is dependent on #B# if the conditional probability of event #A# given event #B# is different from the (unconditional) probability of event #A#, i.e., if:

\[\mathbb{P}(A\ | \ B) \neq \mathbb{P}(A)\] In this situation it is also true that: \[\mathbb{P}(B\ |\ A) \neq \mathbb{P}(B)\]

Interpreting Dependence

When two events are dependent on each other, this means that knowing that one of the events occurred makes the other event either more or less likely to occur.

Independence

Event #A# is independent of #B# if the conditional probability of event #A# given event #B# is equal to the (unconditional) probability of event #A#, i.e., if:

\[\mathbb{P}(A\ |\ B) = \mathbb{P}(A)\] In this situation it is also true that: \[\mathbb{P}(B\ |\ A) = \mathbb{P}(B)\]

Interpreting Independence

When two events are independent of each other, this means that knowing that one of the events occurred does not make the other event either more or less likely to occur.

If you already know that event #B# occurs, then the sample space is reduced. For example, if you are rolling a pair of dice (one #\blue{blue}# and one #\red{red}#), the sample space consists of the #36# ordered pairs #(x,y)#, where #\red{x}# is the number showing on the #\red{red}# die and #\blue{y}# is the number on the #\blue{blue}# die (each die can show any of the integers #1# through #6#).

Suppose event #A# is the event that #\red{x} +\blue{y} = 8#. Of the #36# ordered pairs, only #5# pairs, #(\red{2},\blue{6}),(\red{3},\blue{5}),(\red{4},\blue{4}),(\red{5},\blue{3}),(\red{6},\blue{2})#, make up event #A#.

So #\mathbb{P}(A) = \cfrac{5}{36}#.

But now suppose we are given that event #B# occurs, where event #B# is the event that #y# is an odd integer.

Now the sample space is cut in half, from #36# pairs to the #18# pairs for which #y# is odd. The #18# outcomes comprising this new sample space are precisely the outcomes comprising event #B#.

Further, only #2# of the #5# outcomes comprising event #A# are now possible, that is, only the outcomes in #A\cap B#, which are #(\red{3},\blue{5})# and #(\red{5},\blue{3})#.

So #\mathbb{P}(A\ |\ B) = \cfrac{2}{18} = \cfrac{4}{36}#.

Since #\mathbb{P}(A\ |\ B) \neq \mathbb{P}(A)# we can conclude that #A# and #B# are dependent events. Knowing that #y# is odd makes the event #x + y = 8# a little less likely to occur.

In the example above, we computed #\mathbb{P}(A\ |\ B)# by dividing the number of outcomes in #A\cap B# by the number of outcomes in #B#.

We would get the same result if we divided #\mathbb{P}(A\cap B)# by #\mathbb{P}(B)#:

\[\cfrac{\mathbb{P}(A\cap B)}{\mathbb{P}(B)} = \cfrac{\cfrac{2}{36}}{\cfrac{18}{36}} = \cfrac{2}{18}\]
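The dice example can also be checked by brute-force enumeration. The following is a minimal Python sketch, assuming a fair pair of dice so that all #36# ordered pairs are equally likely; the names `sample_space` and `prob` are illustrative choices, not part of the lesson.

```python
from fractions import Fraction
from itertools import product

# All 36 ordered pairs (x, y): x is the red die, y is the blue die.
sample_space = list(product(range(1, 7), repeat=2))

# Event A: the two dice sum to 8. Event B: the blue die shows an odd number.
A = {(x, y) for x, y in sample_space if x + y == 8}
B = {(x, y) for x, y in sample_space if y % 2 == 1}


def prob(event):
    """Probability of an event when all outcomes are equally likely."""
    return Fraction(len(event), len(sample_space))


p_A = prob(A)
# Method 1: count outcomes inside the reduced sample space B.
p_A_given_B_counting = Fraction(len(A & B), len(B))
# Method 2: divide the joint probability by P(B).
p_A_given_B_ratio = prob(A & B) / prob(B)

print(p_A)                   # 5/36
print(p_A_given_B_counting)  # 1/9 (the reduced form of 2/18)
print(p_A_given_B_ratio)     # 1/9
```

Both methods agree, and the result differs from #\mathbb{P}(A)#, which matches the conclusion that #A# and #B# are dependent.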

Conditional Probability Formula

This gives us the general formula for computing a conditional probability:

\[\mathbb{P}(A\ |\ B) = \cfrac{\mathbb{P}(A \cap B)}{\mathbb{P}(B)}\]

assuming, of course, that #\mathbb{P}(B) > 0#.

Joint Probability of Dependent Events

If we rearrange the formula for computing conditional probabilities, we have a formula for computing the joint probability of two events (i.e., the probability of their intersection):

\[\mathbb{P}(A \cap B) = \mathbb{P}(A\ |\ B)\mathbb{P}(B)\]

Equivalently, we could derive the formula

\[\mathbb{P}(A \cap B) = \mathbb{P}(B\ |\ A)\mathbb{P}(A)\] in the same way.
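As a quick check, both forms can be verified with the dice events from earlier in this lesson (#A#: the sum is #8#; #B#: the blue die is odd), for which #\mathbb{P}(A \cap B) = \cfrac{2}{36}#. Here #\mathbb{P}(B\ |\ A) = \cfrac{2}{5}#, since #2# of the #5# outcomes in #A# have an odd blue die:

\[\mathbb{P}(A\ |\ B)\mathbb{P}(B) = \cfrac{2}{18}\cdot\cfrac{18}{36} = \cfrac{2}{36} \qquad \text{and} \qquad \mathbb{P}(B\ |\ A)\mathbb{P}(A) = \cfrac{2}{5}\cdot\cfrac{5}{36} = \cfrac{2}{36}\]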

Joint Probability of Independent Events

We have shown that

\[\mathbb{P}(A \cap B) = \mathbb{P}(A\ |\ B)\mathbb{P}(B) = \mathbb{P}(B\ |\ A)\mathbb{P}(A)\]

But if #A# and #B# are independent, then

\[\mathbb{P}(A\ |\ B) = \mathbb{P}(A)\] and \[\mathbb{P}(B\ |\ A) = \mathbb{P}(B)\] This means that, if we already know that #A# and #B# are independent, then the formula for the joint probability of #A# and #B# is:

\[\mathbb{P}(A \cap B) = \mathbb{P}(A)\mathbb{P}(B)\]

For example, suppose a security system has two components that work independently. The system will fail if and only if both components fail.

Let #A# denote the event that the first component fails and let #B# denote the event that the second component fails. Thus #A\cap B# denotes the event that the system fails.

Suppose #\mathbb{P}(A) = 0.13#, and #\mathbb{P}(B) = 0.09#. What is the probability the system will fail?

Since the components are independent, the joint probability of event #A# and #B# is:

\[\mathbb{P}(A \cap B) = \mathbb{P}(A)\mathbb{P}(B) = 0.13 \cdot 0.09 = 0.0117\]
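The product rule can also be read in the other direction: two events are independent exactly when #\mathbb{P}(A \cap B) = \mathbb{P}(A)\mathbb{P}(B)#. The sketch below applies that test to the dice events from earlier in this lesson. The helper `are_independent` and the extra event `C` (the red die shows an even number) are hypothetical additions for illustration, and a fair pair of dice is assumed.

```python
from fractions import Fraction
from itertools import product

# All 36 ordered pairs (x, y): x is the red die, y is the blue die.
sample_space = list(product(range(1, 7), repeat=2))


def prob(event):
    """Probability of an event when all outcomes are equally likely."""
    return Fraction(len(event), len(sample_space))


def are_independent(E, F):
    """True when P(E and F) equals P(E) * P(F), using exact fractions."""
    return prob(E & F) == prob(E) * prob(F)


A = {(x, y) for x, y in sample_space if x + y == 8}   # sum is 8
B = {(x, y) for x, y in sample_space if y % 2 == 1}   # blue die is odd
C = {(x, y) for x, y in sample_space if x % 2 == 0}   # red die is even (hypothetical)

print(are_independent(A, B))  # False: 2/36 differs from (5/36)(18/36)
print(are_independent(C, B))  # True: 9/36 equals (18/36)(18/36)
```

Exact fractions are used instead of floating-point numbers so that the equality test is not affected by rounding.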

Joint Probability of Multiple Independent Events

The rule for the joint probability of independent events can be extended to any finite collection of events #A_1,A_2,...,A_n#.

If these #n# events are mutually independent, then

\[\mathbb{P}(A_1 \cap A_2 \cap \cdot\cdot\cdot \cap A_n) = \mathbb{P}(A_1)\mathbb{P}(A_2)\cdot\cdot\cdot \mathbb{P}(A_n)\] Likewise, if this formula is true both for the entire collection and for every possible subset of that collection, then we can conclude that the #n# events are mutually independent.

This formula will come in very handy for statistics. If we observe some variable measured on a random sample of #n# elements, we can assume that the measurements are independent, which will allow us to replace the probability of an intersection of events across all elements with the product of the probabilities of the individual events per element.

For example, a random sample of #5# children selected from a large population is tested for dyslexia. If #6\%# of the children in that population have dyslexia, what is the probability that none of the children in the sample have dyslexia?

First, let #A_i# denote the event that the #i#th randomly-selected child does not have dyslexia. The probability of event #A_i# occurring is:

\[\mathbb{P}(A_i) = 1 - 0.06 = 0.94\] We are asked for the joint probability #\mathbb{P}(A_1 \cap A_2 \cap A_3 \cap A_4 \cap A_5)#.

But since the sample is random and the population is large, we can treat these events as independent, so this joint probability becomes:

\[\mathbb{P}(A_1)\mathbb{P}(A_2)\mathbb{P}(A_3)\mathbb{P}(A_4)\mathbb{P}(A_5) = 0.94^5 \approx 0.734\] So there is about a #73.4\%# probability that none of the #5# children has dyslexia, and hence about a #26.6\%# probability that at least #1# of them does.
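The product in this example can be reproduced in a few lines of Python. This is only a sketch under the same assumption of independent events; the variable names are illustrative.

```python
from math import prod

p_dyslexia = 0.06   # proportion of children in the population with dyslexia
n = 5               # sample size

# P(A_i) for each child in the sample: the child does not have dyslexia.
p_each = [1 - p_dyslexia] * n

# Independence lets us multiply the individual probabilities.
p_none = prod(p_each)
# Complement rule: at least one child has dyslexia.
p_at_least_one = 1 - p_none

print(round(p_none, 3))          # 0.734
print(round(p_at_least_one, 3))  # 0.266
```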

We previously mentioned the Law of Total Probability when there are two events #A# and #B#:

\[\mathbb{P}(B) = \mathbb{P}(B \cap A) + \mathbb{P}\big(B \cap A^C\big)\]

Using conditional probability, this formula becomes:

\[\mathbb{P}(B) = \mathbb{P}(B\ |\ A)\mathbb{P}(A) + \mathbb{P}\big(B\ |\ A^C\big)\mathbb{P}\big(A^C\big)\]

Of course we can exchange the #A#’s and the #B#’s here, since the labeling is arbitrary.

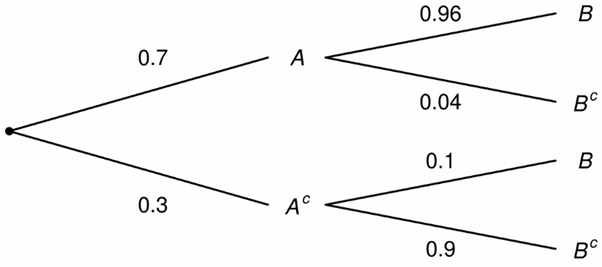

Consider the following probability tree:

This tree shows that event #A# can occur with probability #0.7#, and thus fail to occur with probability #0.3#. Given that event #A# has occurred, event #B# can occur with probability #0.96# (and fail to occur with probability #0.04#). But given that event #A# has failed to occur, event #B# can occur with probability #0.1# (and fail to occur with probability #0.9#).

The tree diagram does not show us the probability that event #B# will occur, only the probability of event #B# under two different conditions (#A# occurring or #A# not occurring).

But we can use the Law of Total Probability to compute #\mathbb{P}(B)#:

\[\mathbb{P}(B) = \mathbb{P}(B\ |\ A)\mathbb{P}(A) + \mathbb{P}\big(B\ |\ A^C\big)\mathbb{P}\big(A^C\big) = (0.96)(0.7) + (0.1)(0.3) = 0.702\]

We can generalize this Law to a situation in which the entire sample space #S# of an experiment can be partitioned into #n# mutually-exclusive events #A_1,A_2,...,A_n#.

This means that #S = A_1 \cup A_2 \cup \cdot\cdot\cdot \cup A_n# and #A_i \cap A_j = \varnothing# for each pair of distinct indices #i \neq j#. In this case the tree diagram has #n# branches on the left side rather than #2# branches.

General form of the Law of Total Probability

Let #A_1, ..., A_n# be a partition of the sample space for some experiment.

Then given any event #B# for the same experiment, the general form of the Law of Total Probability is:

\[\mathbb{P}(B) = \sum_{i=1}^n \mathbb{P}\big(B\ |\ A_i\big)\mathbb{P}\big(A_i\big)\]
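The sum above translates directly into code. The following is a minimal sketch; the function name `total_probability` and its parameter names are hypothetical, and the usage example reuses the numbers from the two-branch probability tree (#\mathbb{P}(A) = 0.7#, #\mathbb{P}(B\ |\ A) = 0.96#, #\mathbb{P}\big(B\ |\ A^C\big) = 0.1#).

```python
def total_probability(partition_probs, conditional_probs):
    """Return P(B) = sum over i of P(B | A_i) * P(A_i).

    partition_probs:   [P(A_1), ..., P(A_n)] for a partition of the sample space
    conditional_probs: [P(B | A_1), ..., P(B | A_n)] in the same order
    """
    if len(partition_probs) != len(conditional_probs):
        raise ValueError("both lists must have the same length")
    # The events of a partition cover the whole sample space.
    if abs(sum(partition_probs) - 1.0) > 1e-9:
        raise ValueError("partition probabilities must sum to 1")
    return sum(c * p for c, p in zip(conditional_probs, partition_probs))


# Two-branch tree: the partition is A and its complement.
p_B = total_probability([0.7, 0.3], [0.96, 0.1])
print(round(p_B, 3))  # 0.702
```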

We might have an experiment that can be thought of as having two stages. At the first stage either event #A# occurs or event #A# does not occur. At the second stage either event #B# occurs or event #B# does not occur.

There can be situations in which you know the outcome of the second stage, and based on that knowledge you would like to know the probability of either outcome of the first stage.

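Posterior Probability

In other words, based on an observed effect, you might want to know the probability of a proposed cause. We call this a posterior probability, because it is computed after the effect has been observed. The unconditional probability #\mathbb{P}(A)# of the cause is its prior probability.

Suppose we want the posterior probability of event #A#, given that we have observed event #B#, i.e., we want: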

\[\mathbb{P}(A\ |\ B)\] We know the formula:

\[\mathbb{P}(A\ |\ B) = \cfrac{\mathbb{P}(A \cap B)}{\mathbb{P}(B)}\]

We can use the Law of Total Probability to replace #\mathbb{P}(B)# with #\mathbb{P}(B\ |\ A)\mathbb{P}(A) + \mathbb{P}\big(B\ |\ A^C\big)\mathbb{P}\big(A^C\big)#.

We can also replace the joint probability #\mathbb{P}(A\cap B)# with either #\mathbb{P}(A\ |\ B)\mathbb{P}(B)# or #\mathbb{P}(B\ |\ A)\mathbb{P}(A)#.

But since we are trying to compute #\mathbb{P}(A\ |\ B)# we obviously don't already have that information, so we go with the second option.

Bayes' Rule

This gives the formula for the posterior probability of #A# given #B#:

\[\mathbb{P}(A\ |\ B) = \cfrac{\mathbb{P}(B\ |\ A)\mathbb{P}(A)}{\mathbb{P}(B\ |\ A)\mathbb{P}(A) + \mathbb{P}\big(B\ |\ A^C\big)\mathbb{P}\big(A^C\big)}\]

This formula is called Bayes’ Rule, named for Thomas Bayes, an English statistician, philosopher and Presbyterian minister from the 18th century.

Generalized Bayes' Rule

We can generalize Bayes’ Rule to the situation in which the entire sample space #S# of an experiment can be partitioned into #n# mutually-exclusive events #A_1,A_2,...,A_n#.

Then the posterior probability of event #A_k#, given that event #B# has occurred, is:

\[\mathbb{P}(A_k\ |\ B) = \cfrac{\mathbb{P}(B\ |\ A_k)\mathbb{P}(A_k)}{\sum^n_{i=1}\mathbb{P}(B\ |\ A_i)\mathbb{P}(A_i)}\]

Suppose #3\%# of passengers who take the ferry from country #U# to country #W# are attempting to smuggle cocaine in their luggage. Dogs at the border are specially trained to detect cocaine in luggage.

If there is indeed cocaine in a bag, there is a probability of #0.90# that the dogs will detect it. However, if there is no cocaine in a bag, there is a probability of #0.05# that the dogs will mistakenly detect cocaine.

A randomly-selected passenger is checked and the dogs indicate that his luggage contains cocaine. What is the probability that the dogs are correct?

Let #A# denote the event that the passenger's luggage contains cocaine. From the given information we know #\mathbb{P}(A) = 0.03#, since #3\%# of the passengers are smuggling cocaine. Thus #\mathbb{P}\big(A^C\big) = 0.97#.

Let #B# denote the event that the dogs detect cocaine in the luggage. From the given information we know #\mathbb{P}(B\ |\ A) = 0.90# and #\mathbb{P}\big(B\ |\ A^C\big) = 0.05#.

We want to know #\mathbb{P}(A\ |\ B)#. Using Bayes’ Rule:

\[\begin{array}{rcl}
\mathbb{P}(A\ |\ B) &=& \cfrac{\mathbb{P}(B\ |\ A)\mathbb{P}(A)}{\mathbb{P}(B\ |\ A)\mathbb{P}(A) + \mathbb{P}\big(B\ |\ A^C\big)\mathbb{P}\big(A^C\big)} =\cfrac{(0.90)(0.03)}{(0.90)(0.03) + (0.05)(0.97)}\\
&=& \cfrac{0.027}{0.027 + 0.0485} = \cfrac{0.027}{0.0755} \approx 0.358
\end{array}\]

Surprisingly, there is about a #2# out of #3# probability that the dogs are mistaken!
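The same calculation can be sketched in Python using the generalized form of Bayes’ Rule. The function name `bayes_rule` and its parameters are hypothetical; the two-event partition here is #A# (cocaine in the luggage) and #A^C# (no cocaine).

```python
def bayes_rule(partition_probs, conditional_probs, k):
    """Return P(A_k | B) for a partition A_1, ..., A_n (k is a 0-based index).

    partition_probs:   [P(A_1), ..., P(A_n)]
    conditional_probs: [P(B | A_1), ..., P(B | A_n)] in the same order
    """
    # Each term P(B | A_i) * P(A_i) is the joint probability of A_i and B.
    joint = [c * p for c, p in zip(conditional_probs, partition_probs)]
    # The denominator is P(B), by the Law of Total Probability.
    p_B = sum(joint)
    if p_B == 0:
        raise ValueError("P(B) must be positive")
    return joint[k] / p_B


# Partition: [cocaine, no cocaine]; B: the dogs indicate cocaine.
p_correct = bayes_rule([0.03, 0.97], [0.90, 0.05], k=0)
p_mistaken = bayes_rule([0.03, 0.97], [0.90, 0.05], k=1)

print(round(p_correct, 3))   # 0.358
print(round(p_mistaken, 3))  # 0.642
```

The two results sum to #1#, since given a detection the luggage either contains cocaine or it does not.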
